Are we asking the right questions about differentiation in Ontario higher education? A look at the HEQCO report (Part 1)

When thinking about systems of higher education, three books that I often return to are Burton Clark’s The Higher Education System, Diana Crane’s Invisible Colleges, and James C. Scott’s Seeing Like a State. All three provide insightful prisms through which to view HEQCO’s most recent discussion of differentiation.

For at least the last half-dozen years, HEQCO has been ruminating about differentiation. So has the province, but for even longer. Depending on how one counts, at least five provincial committees and commissions have taken up the question of differentiation since the late 1960s. That works out to about one commission per decade.

Some proffered sound advice. Some ducked. But, like the weather, in the end no one has done anything to restructure the system or, as some well-informed cynics might say, even accept that there is a system. The track record is one of advice proffered and advice denied. Will HEQCO do any better? A puckish observer might with some reason say that The Differentiation of the Ontario University System: Where we are and where we should go? is a sequel of a sequel of a sequel, of another sequel, and so on.

A “strong central hand” in Ontario?

The first section of the report is almost a separate report. It is a hortatory exegesis on the virtues of centralized plans and systems. This is where Clark, Scott, and others who have thought about the role and efficacy of systems come into play. HEQCO’s vision, which, by the way, does not arise from the data and analysis in the rest of the report, is remarkably simplistic: there is the state and there is the university, either singularly or collectively. There are no other interests, stakeholders, or socio-economic forces in the equation.

The report says, correctly, that an “exclusively or overly centralized approach has little chance of success” but its vision of what systems are, how they work, and how they affect institutional behaviour is centralized and exclusionary. After transubstantiation, the deepest mystery may be the belief held by successive Ontario governments and apparently now HEQCO that it is possible to put less skin into the higher education game and at the same time claim larger stakes. There is a lot of retro-think in the report.

In accurately exposing the disadvantages of homogeneity, the report calls for a “strong central hand” as a necessary means of counteracting the tendency of “institutions [to] drift towards homogeneity more than . . . strive for diversity.” This is a hard pill to swallow, for three reasons.

First, this report and its immediate predecessor – Performance Indicators: A report on where we are and where we are going – describe a system that is diverse in institutional performance if not in form. This report goes even further in that direction.

Second, although the concession is somewhat back-handed, the report itself confirms what virtually everyone else has known for some time: Strategic Mandate Agreements so far have done little or nothing to change the status quo.

Third, and most seriously mistaken, is to blame the universities for homogeneity. One has to look no further than the mechanics of the graduate expansion program, the Access to Opportunity Program, and the funding program to accommodate the double cohort to see that Ontario has persistently promoted homogeneity. The report itself describes the province’s funding formula and tuition policy as “forces of homogenization.” Ontario’s universities have not drifted; they have been fiscally manipulated. A more sophisticated appreciation of higher education systems, Clark’s, for example, would reveal the differences between institutional drift and responsiveness to legitimate forces other than the state.

Having said that, I think that the report misses a key question that arises from its own data and its description of the funding formula. How is it that the report and its predecessor report can identify extensive differentiation of performance in the face of “forces of homogenization”?

What the funding formula does – Homogeneity or heterogeneity?

Two facts about the funding formula are often either overlooked or misunderstood. The first is that the formula funds programs; more specifically, it funds degree programs. It does not fund institutions. The operating grant that a university receives is based almost entirely on the sum of funding generated by each degree program. Thus a nominally homogeneous formula can and does result in heterogeneous grants. The second is this statement that has appeared in every Operating Grant Manual since the formula’s inception:

It should be noted that the distribution mechanism is not intended to limit or control the expenditure of funds granted to the institutions, except in the case of specifically-targeted special purpose grants.

In other words, how a university spends its operating grant need have nothing to do with how that income – grant and fees – was generated by the formula. Add to this programs like the Ontario Student Opportunity Trust Fund and the Ontario Research and Development Challenge Fund, both of which required matching funding from the private sector, and looser regulation of international student tuition fees, and one should ask why the report concludes that the degree of differentiation is “surprising.”

It should not be a surprise at all. In practical effect, it belies the conception that a system can be built on an overly simplified and centralized two-sided relationship between state and institution.
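The first point about the formula, that it funds degree programs rather than institutions, can be illustrated with a minimal sketch. Everything numeric here is an assumption made up for illustration: the program weights, the dollar value per weighted unit, and the two enrolment profiles are hypothetical and are not taken from the Operating Grant Manual or the HEQCO report. The only thing the sketch borrows from the text is the structure: one set of rules applied to every university, with the grant being the sum of what each program generates.

```python
# Illustrative only: the weights, unit value, and enrolments below are
# invented numbers, not figures from the Operating Grant Manual.
PROGRAM_WEIGHTS = {"arts": 1.0, "science": 1.5, "engineering": 2.0, "doctoral": 6.0}
UNIT_VALUE = 5000  # hypothetical dollars per weighted enrolment unit


def operating_grant(enrolment):
    """Sum the funding generated by each degree program."""
    return sum(PROGRAM_WEIGHTS[program] * students * UNIT_VALUE
               for program, students in enrolment.items())


# Two hypothetical universities with the same total enrolment (10,000)
# but a different mix of degree programs.
university_a = {"arts": 8000, "science": 1500, "engineering": 0, "doctoral": 500}
university_b = {"arts": 3000, "science": 3000, "engineering": 2500, "doctoral": 1500}

grant_a = operating_grant(university_a)
grant_b = operating_grant(university_b)
per_student_a = grant_a / sum(university_a.values())
per_student_b = grant_b / sum(university_b.values())

print(f"A: total {grant_a:,.0f}, per student {per_student_a:,.0f}")
print(f"B: total {grant_b:,.0f}, per student {per_student_b:,.0f}")
```

Under these assumed numbers the same formula yields quite different grants per student for two universities of identical size, purely because of program mix. That is the sense in which a nominally homogeneous formula can produce heterogeneous grants.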

Indicators: Questions that need to be asked

“Where we are and where we should go?” sounds something like “the Once and Future King.” I really admire the work that Martin Hicks and Linda Jonker have done in this report and its predecessor. Another of their reports, on teaching loads and research output, was equally splendid. It could have played a larger role in this report. Theirs is an uphill struggle in a jurisdiction that is data poor and sometimes secretive about data that exist but are not accessible. Thanks to their efforts we do indeed know a lot more about where we are, even if the report overall is a bit fuzzy about why we are where we are.

Instead of discussing the indicators one-by-one, let’s look at them from the perspective of the report’s five over-arching observations. The first one is addressed below, and a subsequent post will discuss the others.

Are these the right data?

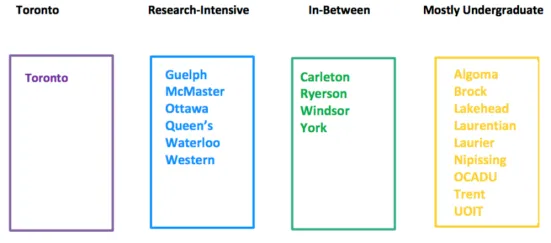

There are two different ways of thinking about this question. Are these the right data to validate inductively the type of system and “clusters” that the report proposes? Or are these the right data to inform deductively the design of a new system that does more than rationalize and formalize the status quo?

The answer to the first version of the question is more often “yes” than “no,” but there are some surprising omissions. For example, with regard to equity, data about institutional spending for need-based financial aid would be a useful means of validating the “equity of access” of each cluster. We know where under-represented students – some of them – are now, but we do not know much at all about how they got there.

What were their choices? What offers did they receive? In other words, we need to know more about choice of access. The absence of data about yield rates is an omission, albeit one that the report acknowledges. Nevertheless, it would be helpful from a system differentiation perspective to know what the “right data” should be.

The second version of the question is the more complex because the report does not tell us what other system models, if any, were considered. There is a curious footnote that might imply that the system proposed in the report is like the California system. It isn’t. Would a Canadian version of the Carnegie taxonomy work? We don’t know, but as an analytical exercise it could have been done.

The report talks about sustainability. Question: using the proposed “cluster” model, how volatile has the nominal system been? If the same data sets were used to recalculate the institutional assignments for, say, 2011, 2006, 2001, and 1996, would the results be the same? If they were different a question could follow about factors that change institutional performance.
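The volatility question could be approached along the following lines. This is only a sketch of the comparison, not the report’s method: the institution names, the cluster assignments, and the helper `changed_share` are all hypothetical placeholders, and a real test would first have to recompute each year’s clusters from the report’s own data sets.

```python
# Hypothetical cluster assignments by year. Institution names and the
# assignments themselves are placeholders, not results from the report.
assignments = {
    1996: {"Univ A": "regional", "Univ B": "mostly undergraduate",
           "Univ C": "regional", "Univ D": "mostly undergraduate"},
    2001: {"Univ A": "regional", "Univ B": "mostly undergraduate",
           "Univ C": "mostly undergraduate", "Univ D": "mostly undergraduate"},
    2006: {"Univ A": "regional", "Univ B": "regional",
           "Univ C": "mostly undergraduate", "Univ D": "mostly undergraduate"},
    2011: {"Univ A": "regional", "Univ B": "regional",
           "Univ C": "mostly undergraduate", "Univ D": "mostly undergraduate"},
}


def changed_share(earlier, later):
    """Return the share of institutions whose cluster changed, and which ones."""
    common = earlier.keys() & later.keys()
    moved = sorted(u for u in common if earlier[u] != later[u])
    return len(moved) / len(common), moved


years = sorted(assignments)
volatility = {}
for start, end in zip(years, years[1:]):
    volatility[(start, end)] = changed_share(assignments[start], assignments[end])
    share, moved = volatility[(start, end)]
    print(f"{start} to {end}: {share:.0%} changed cluster {moved}")
```

If the shares were consistently near zero, the clusters would look like stable features of the system. If they were not, the list of institutions that moved would be the natural starting point for asking what changed their performance.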

A more specific version of this question would ask about the capacity of the “regional” and “mostly undergraduate” clusters to have accommodated, for example, all increased demand for access generated by the double cohort. In terms of capacity, what is the statistical fit between the proposed clusters and the surplus teaching capacity identified by the earlier report on teaching and research?

A big category of data missing, regardless of which version of the question one asks, is about the fiscal status of universities in each cluster. In other words, what is the statistical relationship between fiscal differentiation and “performance” differentiation? Using COFO-UO data, the average and institutional distributions of income and expense would fit the wonderful five-dimensional displays already used in the report, and could then be compared.

Since HEQCO now prefers “regional” instead of “in between” to categorize some universities, are more data needed about the rate of students studying away from home? The UK and the United States, jurisdictions not unlike Canada and Ontario in terms of university education, have much higher rates of this form of student mobility than we do. (Thanks to Alex Usher for pointing this out.)

Why? Is “regional” a synonym for what “catchment” meant previously for colleges? To what degree does the definition “regional” depend on mobility? What if the Ontario rate were higher? In terms of equity of access, are there variations in mobility? Is there a possibility that “equity of access” per se could rise but with ghettoization as an unwelcome by-product?

The next post will discuss other questions raised in the report, such as: How robust are the data? Do the data reveal, and is this study about, university performance? Does size matter?